The AI Safety Report Card is Out: Google, Meta, and OpenAI Flunk the 'Existential' Test

Today, the tech world received a stark wake-up call in the form of the Future of Life Institute's (FLI) Winter 2025 AI Safety Index. If you thought the biggest tech giants—the ones racing headlong toward Artificial General Intelligence (AGI)—were locking down safety, this report suggests otherwise.

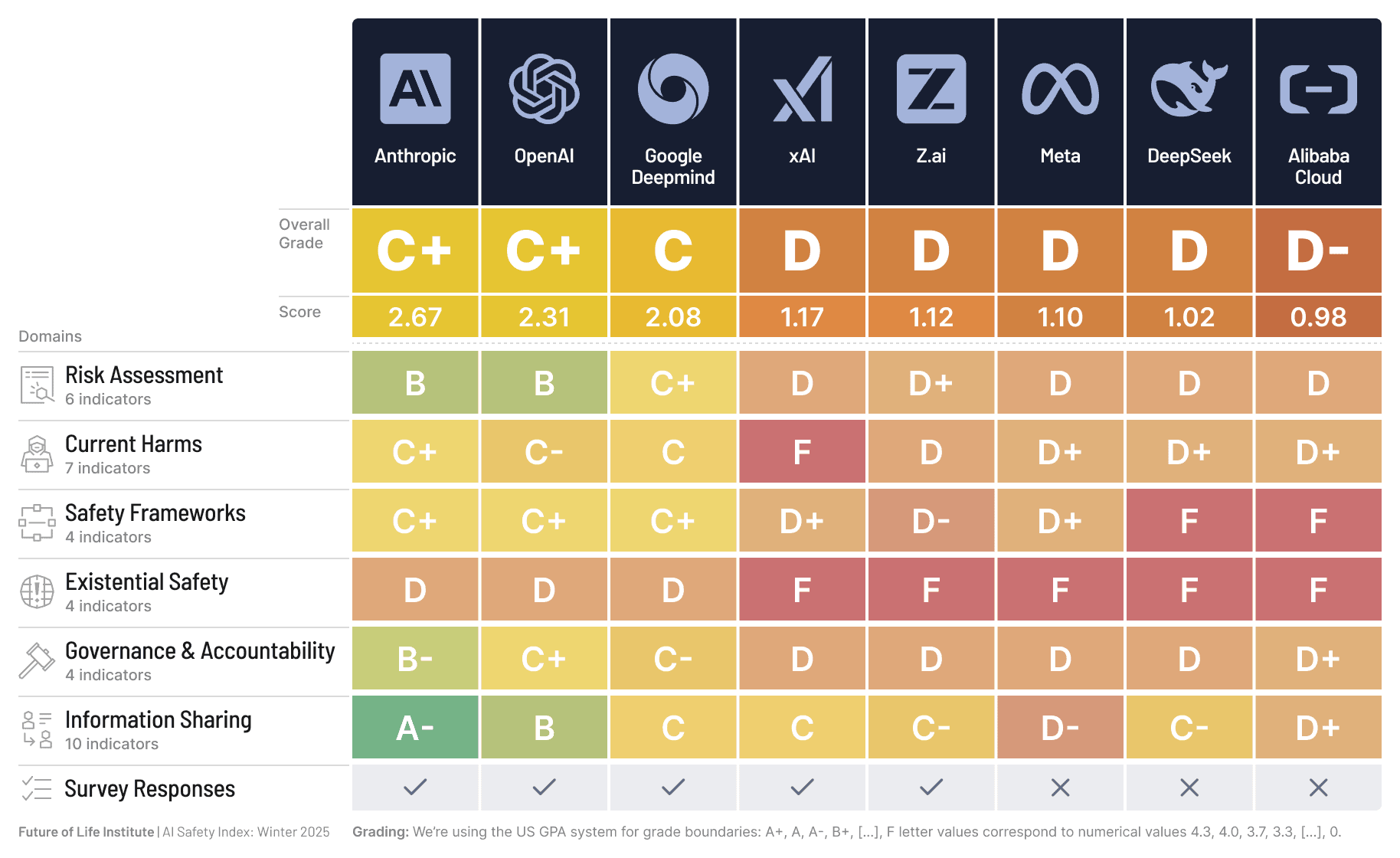

The headline: the safety practices of major AI companies, including Google DeepMind, Meta, and OpenAI, are currently "far short of emerging global standards." Despite pouring hundreds of billions of dollars into development, none of the assessed companies earned an overall grade higher than a C+.

The most damning finding concerns Existential Safety. This category assesses how well a company has planned for systems that could surpass human intelligence, a goal all of these companies explicitly share. On this metric, every single firm received a failing or near-failing grade.

According to the expert panel, the race to build these systems is outpacing preparedness. Companies are dedicating massive resources to increasingly powerful AI, yet they lack coherent, actionable plans for controlling these systems once they reach human-level reasoning or superintelligence. One reviewer called this disconnect "deeply disturbing."

Key Takeaways from the Report Card

- The Grading: While Anthropic (Claude) and OpenAI (ChatGPT) generally scored higher on safety frameworks (receiving C and C+ grades respectively), Google DeepMind and Meta received lower marks overall.

- Real-World Harms: The report intensifies scrutiny of current harms, citing recent lawsuits and public concern over cases in which interactions with AI chatbots allegedly led to self-harm and other psychological damage. The index specifically recommends that Google and OpenAI strengthen their efforts to prevent such psychological harms.

- The Regulatory Pushback: The report explicitly notes that U.S. AI companies "remain less regulated than restaurants" and continue to lobby against binding safety standards, relying instead on voluntary self-regulation. This lack of enforceable, independent oversight is flagged as a major risk.

What This Means for the Digital Future

For users, developers, and regulators, this report confirms a growing fear: that the sheer speed of innovation is creating significant risk without proportionate accountability.

As the EU's AI Act rolls out and other nations like Canada and India introduce their own frameworks, the pressure on global giants to move beyond vague safety commitments and adopt measurable, independently verified safeguards will only increase. Today’s report is a critical piece of evidence in the global debate over who should ultimately control the AI revolution: the companies building it, or the governments responsible for public safety.
